SQL – Explanation of Big Data

Let’s answer a few questions to understand Big Data.

What is Big Data?

This is a really interesting topic. We all know that data grows every single day. The McKinsey Global Institute estimates that data volume is growing 40% per year and will grow 44x between 2009 and 2020, which gives a sense of how fast it is growing. But volume is not the only characteristic that matters. That brings us to the next question:
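A quick compound-growth check shows how the two figures relate. This back-of-the-envelope sketch assumes a constant annual rate compounded over the 11 years from 2009 to 2020. The function names are hypothetical.

```python
# Back-of-the-envelope check of the growth figures quoted above.
# Assumption: a constant annual growth rate, compounded once per year.

def growth_factor(annual_rate: float, years: int) -> float:
    """Total growth multiple after compounding annual_rate for `years` years."""
    return (1 + annual_rate) ** years


def implied_annual_rate(total_factor: float, years: int) -> float:
    """Constant annual rate needed to reach total_factor in `years` years."""
    return total_factor ** (1 / years) - 1


years = 2020 - 2009  # 11 years
print(f"40% per year for {years} years -> {growth_factor(0.40, years):.1f}x")
print(f"44x over {years} years -> {implied_annual_rate(44, years):.1%} per year")
```

Growing 40% a year for 11 years gives roughly 40.5x. Reaching 44x needs about 41% a year, so the two estimates are roughly consistent.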

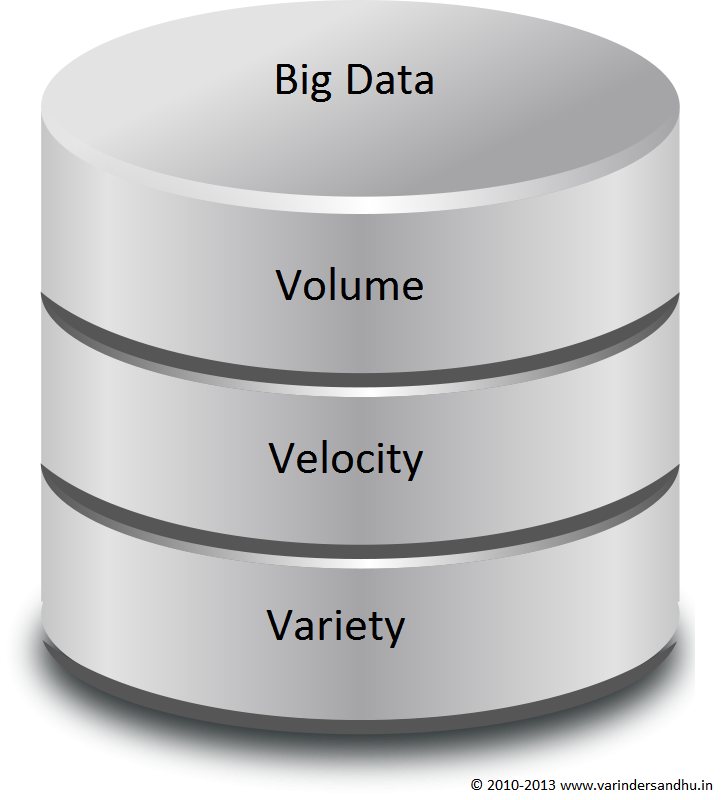

What are the characteristics that define Big Data?

There are three key characteristics that define Big Data:

- Volume – Size of the data

- Velocity – Speed at which data is generated and grows

- Variety – Data in many different formats

We now know that data is growing enormously. Beyond a certain point, a relational database cannot process such large amounts of data. We also know how important data is in our lives. Social networking sites like Facebook and LinkedIn wouldn’t exist without Big Data. Their business model requires a personalized experience on the web, which can only be delivered by capturing and using all the available data about a user or member. You may now be wondering how we handle the processing of Big Data.

What is NoSQL (Not only SQL)?

A NoSQL database provides a mechanism for storing and retrieving data. It uses looser consistency models than traditional relational databases in order to achieve horizontal scaling and higher availability.
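Horizontal scaling means adding more machines rather than buying a bigger one. One common way to spread data across machines is hash partitioning, also called sharding: a hash of each record's key decides which node stores it. This minimal sketch assumes a fixed list of hypothetical node names. It uses MD5 from Python's `hashlib` so that placement is the same on every run. Real systems often use more elaborate schemes such as consistent hashing, so treat this only as an illustration of the idea.

```python
import hashlib

# Hypothetical cluster of three storage nodes.
NODES = ["node-a", "node-b", "node-c"]


def node_for_key(key: str, nodes: list[str]) -> str:
    """Pick the node that stores `key` by hashing it (simple modulo sharding)."""
    # A stable hash is used because Python's built-in hash() of a str
    # changes between processes.
    digest = hashlib.md5(key.encode("utf-8")).hexdigest()
    return nodes[int(digest, 16) % len(nodes)]


def partition(records: dict[str, dict], nodes: list[str]) -> dict[str, dict[str, dict]]:
    """Group records by the node responsible for each key."""
    shards: dict[str, dict[str, dict]] = {n: {} for n in nodes}
    for key, value in records.items():
        shards[node_for_key(key, nodes)][key] = value
    return shards


users = {f"user:{i}": {"id": i} for i in range(9)}
shards = partition(users, NODES)
for node, shard in shards.items():
    print(node, sorted(shard))
```

Each node holds only its own share of the keys. Any client can compute which node to ask without consulting a central index.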

NoSQL databases are commonly used to store Big Data. They are well suited to dynamic data structures and are highly scalable. Highly varied data is typically stored in a NoSQL database because it simply captures all the data without first categorizing and parsing it.

Difference between NoSQL Databases and Relational Databases

NoSQL databases are designed to capture all data without categorizing and parsing it, so the stored data can be highly varied. Relational databases, on the other hand, typically store data in well-defined structures. They impose metadata (a schema) on the captured data to ensure consistency and validate data types.
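The contrast can be sketched in a few lines of Python. The hypothetical `RelationalTable` imposes a fixed schema of column names and types and rejects rows that do not match. The hypothetical `DocumentStore` accepts any record as-is. This is a toy illustration of the two approaches, not how any particular database engine is implemented.

```python
from typing import Any


class RelationalTable:
    """Toy table that enforces a fixed schema on every insert."""

    def __init__(self, schema: dict[str, type]):
        self.schema = schema
        self.rows: list[dict[str, Any]] = []

    def insert(self, row: dict[str, Any]) -> None:
        # Every row must have exactly the declared columns...
        if set(row) != set(self.schema):
            raise ValueError(f"columns {sorted(row)} do not match schema {sorted(self.schema)}")
        # ...and every value must have the declared type.
        for column, expected in self.schema.items():
            if not isinstance(row[column], expected):
                raise TypeError(f"{column!r} must be {expected.__name__}")
        self.rows.append(row)


class DocumentStore:
    """Toy schema-less store that captures whatever it is given."""

    def __init__(self):
        self.documents: list[dict[str, Any]] = []

    def insert(self, document: dict[str, Any]) -> None:
        self.documents.append(document)  # no parsing or validation


users = RelationalTable({"id": int, "name": str})
users.insert({"id": 1, "name": "Alice"})

docs = DocumentStore()
docs.insert({"id": 1, "name": "Alice"})
docs.insert({"id": 2, "tags": ["sql", "nosql"]})  # a different shape is accepted

try:
    users.insert({"id": "2", "name": "Bob"})  # wrong type for id
except TypeError as err:
    print("relational insert rejected:", err)
```

The relational side catches the bad row at write time. The document side accepts records of any shape and leaves interpretation to whoever reads the data later.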

What is Hadoop?

Hadoop is an open source framework that supports the processing of large data sets in a distributed computing environment and enables applications to work with Big Data. It was designed to run on a large number of machines that don’t share memory or disks, an architecture known as shared-nothing. Hadoop maintains and manages the data across all of these independent machines. It can also store copies of the same data on multiple machines, which keeps the data available in case of a disaster or a single machine failure. Facebook and Yahoo use Hadoop to process their Big Data.
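A toy replica-placement sketch shows why keeping copies on several machines protects availability. It assumes each data block is copied to three distinct machines, which is HDFS's default replication factor. Copies are placed round-robin here, whereas HDFS's real placement policy is rack-aware, so treat the helper functions as hypothetical.

```python
def place_replicas(blocks: list[str], machines: list[str], replication: int = 3) -> dict[str, list[str]]:
    """Assign each block to `replication` distinct machines, round-robin."""
    if replication > len(machines):
        raise ValueError("need at least as many machines as replicas")
    placement = {}
    for i, block in enumerate(blocks):
        # Start at a different machine for each block to spread the load.
        placement[block] = [machines[(i + r) % len(machines)] for r in range(replication)]
    return placement


def readable_blocks(placement: dict[str, list[str]], failed: set[str]) -> list[str]:
    """Blocks that still have at least one copy on a machine that is up."""
    return [b for b, hosts in placement.items() if any(h not in failed for h in hosts)]


machines = ["m1", "m2", "m3", "m4"]
blocks = ["block-0", "block-1", "block-2", "block-3", "block-4"]
placement = place_replicas(blocks, machines)
print(placement["block-0"])
print(readable_blocks(placement, failed={"m2"}) == blocks)
```

With three copies of every block on four machines, losing any one machine, or even two, still leaves every block readable.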

Hadoop was inspired by Google’s MapReduce.

What is MapReduce?

A MapReduce program includes two steps: a Map step that performs filtering and sorting, and a Reduce step that performs a summary operation.

In the Map step, the master node takes the input, divides it into smaller chunks, and distributes them to worker nodes. In the Reduce step, the partial results for all the smaller pieces of the problem are collected and combined into a single answer, which is returned as the output.
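The usual way to illustrate the two steps is counting words. This sketch runs the Map, shuffle (group by key) and Reduce phases in a single Python process to show how the data flows. In Hadoop, these phases run in parallel across worker nodes. The function names are hypothetical and this is not Hadoop's API.

```python
from collections import defaultdict
from typing import Iterable, Iterator


def map_phase(chunk: str) -> Iterator[tuple[str, int]]:
    """Map: emit (word, 1) for every word in one chunk of input."""
    for word in chunk.lower().split():
        yield word, 1


def shuffle(pairs: Iterable[tuple[str, int]]) -> dict[str, list[int]]:
    """Shuffle: group all emitted values by key (done by the framework in Hadoop)."""
    groups: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key: str, values: list[int]) -> tuple[str, int]:
    """Reduce: summarize the values for one key into a single result."""
    return key, sum(values)


# The input is already split into chunks, as the master node would hand them to workers.
chunks = ["big data is big", "data is growing", "big data needs hadoop"]

mapped = (pair for chunk in chunks for pair in map_phase(chunk))
result = dict(reduce_phase(k, v) for k, v in sorted(shuffle(mapped).items()))
print(result)
```

This prints `{'big': 3, 'data': 3, 'growing': 1, 'hadoop': 1, 'is': 2, 'needs': 1}`. Each chunk can be mapped independently, which is what lets the work spread across many machines.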


Reference: http://gigaom.com/2012/07/07/why-the-days-are-numbered-for-hadoop-as-we-know-it/
